Why Docker and what is it?

Docker is becoming the de facto standard in the container industry, and its popularity is growing steadily day by day. According to Docker, over 3.5 million applications have been containerized using Docker technology, and over 37 billion containerized applications have been downloaded. You may be surprised how many popular technologies run in Docker: NGINX, Redis, Postgres, Elasticsearch, MongoDB, MySQL, RabbitMQ, and that is not even a full list!

Of course, you can work without Docker. Many applications run successfully without it, and everything seems to go fine. Behind the scenes, though, the situation is often quite different. If you are a developer or a DevOps engineer, you know it is not as easy as it looks at first glance. How many times have you built a feature that worked perfectly on your local machine, only to find that something went wrong right after deploying it to staging or production? Docker greatly reduces such problems: a packaged Docker application can run in any supported Docker environment and behaves the way it was intended to on every deployment target. You can develop and debug it on your machine and then deploy it to another machine, confident that it will run in the same environment.

Here at Redwerk, we have been using Docker a lot recently for both in-house and client projects, and we have learned from our own experience that Docker can make your life easier.

With Docker, we have significantly reduced the downtime of our test sites during deployments, because the current container keeps running while a new build is being created. This helps in many situations: your QA team does not have to wait until you deploy new changes, and your customers will not even notice that a deployment happened while they were using your website. In the modern world, this is quite important.
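The idea behind this can be sketched in a few lines. The Python below is a toy model, not our actual deployment tooling: the hypothetical `Container` and `Router` classes stand in for a running container and the reverse proxy in front of it, and the `build` callable stands in for building and starting the new image. What matters is the ordering: the old container keeps serving until the new one is built and reports healthy, and only then does traffic switch over.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Container:
    """Hypothetical stand-in for a running container (no Docker calls are made)."""
    image_tag: str
    healthy: bool = True

    def handle(self, request: str) -> str:
        return f"{self.image_tag} served {request}"


class Router:
    """Hypothetical stand-in for a reverse proxy that forwards to one active container."""

    def __init__(self, active: Container) -> None:
        self.active = active

    def handle(self, request: str) -> str:
        return self.active.handle(request)


def swap_when_ready(router: Router, build: Callable[[], Container]) -> bool:
    """Build the new container while the old one keeps serving; switch only if healthy."""
    candidate = build()           # the old container still handles requests during this call
    if not candidate.healthy:     # a failed build never receives traffic
        return False
    router.active = candidate     # the switch-over is a single reference assignment
    return True
```

If `build` returns an unhealthy container, `swap_when_ready` returns `False` and the previous version simply keeps serving, which is what keeps QA and customers unaffected by a broken build.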

Docker lets you package an application or service together with its dependencies and configuration as a portable, self-sufficient container image that can run in the cloud or on-premises. In short, containers offer isolation, portability, agility, scalability, and control across the whole application lifecycle. For us, this means we can package our applications with all their dependencies and conveniently deploy them to test or production environments. It simplifies our development process, makes it easier to move project work to another machine, and shortens the time it takes to onboard new developers to a project.

Docker containers are sometimes compared to virtual machines, and the two have a lot in common. In particular, both let you create an image and run several instances of it that work safely in isolation from each other. However, containers have additional advantages that make them better suited for building and deploying applications.

Unlike virtual machines, containers share the host OS kernel: they do not require a full guest operating system and run as isolated processes (Hyper-V containers are an exception, since each one runs inside its own lightweight VM). Because of that, they are easy to deploy and start very fast, which is critically important in production. Containers are also more lightweight than virtual machine images. Containerized applications are easy to scale because containers can be added to or removed from an environment quickly, much like starting or stopping a process such as a web app or service. Developers can also use different development environments: on Mac and Linux, you can use Linux images; on Windows, either Linux or Windows images. This does not affect container consistency, so containers are more portable.

Virtual machines still have an important role, since containers are very often run inside virtual machines, including on cloud infrastructure from vendors such as Amazon, Google, and Microsoft.

Docker terminology

Here is a short list of Docker terms you need to know to understand the details and get deeper into Docker.

Container image: Docker images are the basis of containers. An image is an ordered collection of root filesystem changes and the corresponding execution parameters for use within a container runtime (in other words, all dependencies plus deployment and execution configuration). An image typically contains a union of layered filesystems stacked on top of each other. An image is immutable and has no state.
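To make the layering idea concrete, here is a toy Python model of how stacked read-only layers resolve into the single filesystem a container sees. It is an illustration under simplifying assumptions, not how Docker's storage drivers are implemented: each layer is modeled as a dict from path to file content, upper layers override lower ones, and a `WHITEOUT` marker (named after the whiteout entries that union filesystems such as OverlayFS use for deletions) records that a file was removed. The three-layer image and its paths are hypothetical. A running container adds its own writable layer on top, which is why the image itself never changes.

```python
from typing import Dict, List, Union


class Whiteout:
    """Marker meaning 'this path was deleted in this layer'."""


WHITEOUT = Whiteout()
Layer = Dict[str, Union[str, Whiteout]]  # path -> file content or deletion marker


def merged_view(layers: List[Layer]) -> Dict[str, str]:
    """Resolve stacked layers (bottom first) into the filesystem a container sees."""
    view: Dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if isinstance(content, Whiteout):
                view.pop(path, None)   # an upper layer deleted the file
            else:
                view[path] = content   # an upper layer added or overwrote the file
    return view


# A hypothetical three-layer image: base OS, runtime, application.
image: List[Layer] = [
    {"/etc/os-release": "debian", "/tmp/cache": "stale"},
    {"/usr/share/dotnet/dotnet": "runtime"},
    {"/app/app.dll": "v1", "/tmp/cache": WHITEOUT},
]

# A container gets its own writable layer on top; the image layers stay untouched.
container_layer: Layer = {"/app/app.dll": "patched-at-runtime"}
```

`merged_view(image)` yields the image's filesystem without `/tmp/cache`, while `merged_view(image + [container_layer])` shows the container's modified file; the dicts in `image` are never mutated, mirroring the statement that an image is immutable.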

Container: a runtime instance of a Docker image. It consists of an image, an execution environment, and a standard set of instructions. According to Docker, the concept is borrowed from shipping containers, which define a standard for shipping goods globally. Docker defines a standard for shipping software.

Tag: a label you can apply to images so that different images or versions in a repository can be distinguished from each other.
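Tags appear in image references such as `redis:7` or `localhost:5000/myapp:v2` (the registry and app names here are hypothetical examples). The Python sketch below shows one simplified way to split a reference into repository, tag, and digest. It relies on two properties of Docker's reference format: a reference with neither a tag nor a digest refers to the `latest` tag, and a colon that comes before the last slash belongs to a registry port rather than a tag. It does not validate allowed characters, so treat it as an illustration rather than a replacement for a real reference parser.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split 'repository[:tag][@digest]' into its parts (simplified, no validation)."""
    name, _, digest = ref.partition("@")
    last_slash = name.rfind("/")
    last_colon = name.rfind(":")
    if last_colon > last_slash:
        # The colon comes after the final slash, so it separates the tag.
        return ImageRef(name[:last_colon], name[last_colon + 1:], digest or None)
    # Any remaining colon belongs to a registry host:port such as localhost:5000.
    # With no tag and no digest, Docker falls back to the "latest" tag.
    return ImageRef(name, None if digest else "latest", digest or None)
```

For example, `parse_image_ref("redis")` gives repository `redis` with tag `latest`, and `parse_image_ref("localhost:5000/myapp:v2")` gives repository `localhost:5000/myapp` with tag `v2`.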

Dockerfile: a text document that contains all the commands you would normally execute manually in order to build a Docker image. Images can be built automatically by Docker, using these instructions.

Repository: a set of Docker images. A repository can be shared by pushing it to a registry server. It can contain multiple variants of a specific image (for example, platform variants or heavier and lighter variants of an image).

Docker Hub: a centralized resource for working with Docker and its components. It provides image hosting, user authentication, automated image builds and workflow tools (such as build triggers and webhooks), and integration with GitHub and Bitbucket.

Kitematic: a legacy GUI, bundled with Docker Toolbox, for managing Docker containers.

Cluster: a collection of Docker hosts exposed as if it were a single host. Clusters can be created with Docker Swarm, Mesosphere DC/OS, Kubernetes, and Azure Service Fabric.

Orchestrator: a tool that simplifies the management of clusters and Docker hosts. Orchestrators provide features for managing images, containers, and hosts, as well as container networking, configuration, load balancing, service discovery, high availability, and more. Typically, orchestrator products are the same products that provide the cluster infrastructure.

.NET Core vs .NET Framework for Docker Containers

There are two supported frameworks for building server-side containerized Docker applications with .NET: .NET Framework and .NET Core. There are fundamental differences between them, and the answer to the question “Which framework should be used?” will depend on what you want to accomplish. So, let’s talk about what to choose – .NET Core or .NET Framework for Docker Containers.

When .NET Core should be used for Docker containers

The short answer, based on the advantages listed above: choose .NET Core when you need a cross-platform, fast, lightweight platform, or when your application architecture is based on microservices. But let's dive into the details.

First, use .NET Core if your goal is an application that can run on multiple platforms. No further explanation is needed: if you need to deploy server apps with Linux or Windows container images, the choice is obvious.

Second, when your goal is to create and deploy microservices in containers, .NET Core is the preferred choice, again because the platform is lightweight. A microservice is meant to be as small as possible: light when spinning up, with a small footprint, a small Bounded Context, and a small area of concerns, and able to start and stop fast. For those requirements, you want small, fast-to-instantiate container images like the .NET Core image. In contrast, to use .NET Framework in a container, you must base your image on the Windows Server Core image, which is much heavier than the Windows Nano Server or Linux images you use for .NET Core.

Third, choose .NET Core if you need to update your application regularly. Regular updates keep a project current, which saves money and lowers risk, so this point plays a pivotal role. .NET Core supports installing different versions of the runtime side by side on the same machine. This advantage matters more for servers or VMs that do not use containers, because containers already isolate the version of .NET that each app needs, as long as that version is compatible with the underlying OS.

Last but not least, when you have a container-based website, your best choice of technology stack is .NET Core with ASP.NET Core, which gives your system the best possible density, granularity, and performance. ASP.NET Core is up to ten times faster than ASP.NET on the traditional .NET Framework. This is especially relevant for microservices architectures: when you have hundreds of microservices (containers) running, or plan to grow to that number, ASP.NET Core images (based on the .NET Core runtime) on Linux or Windows Nano Server let you run your system on far fewer servers or VMs, ultimately saving on infrastructure and hosting costs.

When .NET Framework should be used for Docker containers

.NET Core has a lot of benefits, but .NET Framework is still a good choice for many existing scenarios.

Certain NuGet packages need Windows to run and might not support .NET Core. Third-party libraries are quickly embracing .NET Standard, which enables code sharing across all .NET flavors, including .NET Core, but the problem still exists. If those packages are critical to your application, you will need to use .NET Framework in Windows Containers. For some packages, the problem is already solved: the recently released Windows Compatibility Pack extends the API surface available to .NET Standard 2.0 on Windows, which lets you recompile most existing code to .NET Standard 2.x with little or no modification and run it on Windows. Still, there is a chance that a package you depend on will not be compatible, and in that situation .NET Framework is the right choice.

Some .NET Framework technologies are not available in the current version of .NET Core (version 2.1 as of this writing). Some of them will be available in later releases, but others might never be. Here is Microsoft's official list of the most common technologies not found in .NET Core:

- ASP.NET Web Forms applications: ASP.NET Web Forms are only available in the .NET Framework and ASP.NET Core cannot be used for them. There are no plans to bring ASP.NET Web Forms to .NET Core.

- ASP.NET Web Pages applications: they aren’t included in ASP.NET Core. ASP.NET Core Razor Pages have many similarities with Web Pages.

- WCF services implementation: even though there is a WCF client library for consuming WCF services from .NET Core, WCF server implementation is currently available only in the .NET Framework. This scenario is not part of the current plan for .NET Core, but it is being considered for the future.

- Workflow-related services: Windows Workflow Foundation, Workflow Services (WCF + WF in a single service) and WCF Data Services are only available in the .NET Framework. There are no plans to bring them to .NET Core.

- Language support: Visual Basic and F# are currently supported in .NET Core, but not for all project types. At the time of writing, both are supported for the Console application, Class library (classlib), Unit test project (mstest), and xUnit test project templates. F# is also supported for ASP.NET Core empty, ASP.NET Core Web App (Model-View-Controller), and ASP.NET Core Web API. Other project types are not supported.

Finally, if you have a stable project that does not need to be extended and has no problems for now, you really do not need to migrate it to .NET Core. A recommended approach is to adopt .NET Core as you extend an existing application, for example by writing a new service in ASP.NET Core. You might also use Docker containers just to simplify deployment: containers provide better-isolated test environments and can eliminate deployment issues caused by missing dependencies when you move to production. In cases like these, it makes sense to use Docker and Windows Containers for your current .NET Framework applications.

So, why is .NET Core so cool?

Now that we have discussed the major pros and cons of both frameworks, we want to share more details on why .NET Core is so good and what you need to know to work with it.

The .NET platform was introduced in 2002 and has undergone tremendous transformations since then. For a long time, .NET was a multi-platform environment but not a cross-platform one: it was mostly a Windows thing.

.NET Core breathed new life into the .NET platform. It was rewritten from scratch to be an open-source, modular, lightweight, and cross-platform framework for creating web applications and services that run on Windows, Linux, and Mac. Here are the key facts you should know about Microsoft's .NET Core.

.NET Core is cross-platform. If you are used to thinking that the .NET platform is designed for Windows only, .NET Core changes that: the framework already runs on Windows, macOS, and Linux. In our company, we have a successful ASP.NET Core project in which our team uses different OS platforms: the frontend team develops on Linux, one of our backend developers uses macOS, and two other developers work on Windows machines. Everything works like Swiss clockwork, without a hitch, as if everyone had the same operating system. A couple of years ago this would have seemed unbelievable; nowadays it is a reality. Of course, some individual components, for example OS-specific things like the file system, require separate implementations. The NuGet delivery model hides these differences: developers get a single API that runs on different platforms and do not need to take care of the details, because the package already contains a separate implementation for each environment.
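The "one API, several implementations" idea can be illustrated outside .NET. The Python sketch below is only an analogy: NuGet packages carry platform-specific assets in per-runtime-identifier (RID) folders and the .NET tooling selects the right one for the target platform, whereas this toy code picks an implementation at call time using `platform.system()`. The function name `config_dir` and the directory paths are hypothetical examples, not a real library's API.

```python
import platform
from typing import Callable, Dict, Optional


def _config_dir_windows(app: str) -> str:
    return "C:\\ProgramData\\" + app


def _config_dir_linux(app: str) -> str:
    return "/etc/" + app


def _config_dir_macos(app: str) -> str:
    return "/Library/Application Support/" + app


# The "package" ships every implementation, keyed by platform.system() names.
_IMPLEMENTATIONS: Dict[str, Callable[[str], str]] = {
    "Windows": _config_dir_windows,
    "Linux": _config_dir_linux,
    "Darwin": _config_dir_macos,  # platform.system() reports macOS as "Darwin"
}


def config_dir(app: str, os_name: Optional[str] = None) -> str:
    """Single public API; the OS-specific implementation is chosen behind it."""
    name = os_name or platform.system()
    impl = _IMPLEMENTATIONS.get(name)
    if impl is None:
        raise NotImplementedError(f"no implementation for platform {name!r}")
    return impl(app)
```

Callers only ever see `config_dir("myapp")`; the optional `os_name` argument exists so the selection logic can be exercised on any machine.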


Container support. The modularity and lightweight nature of .NET Core make it perfect for containers. It is optimized for cloud-specific workloads, and Microsoft Azure even supports deploying your application to containers and Kubernetes. When you create and deploy a container, its image is far smaller with .NET Core than with .NET Framework. .NET Core also fits best with the philosophy and working style of containers.

.NET Core is open source. Using an open-source platform has many advantages: it is more transparent, you have more control over how you use and change it, and it tends to foster a stronger ecosystem.

High performance. .NET Core is also the fastest .NET ever, and the best part is that much of this speed does not require changes to your code: improvements in the runtime, JIT compiler, and libraries benefit existing code automatically. The new Kestrel web server was redesigned from scratch to take advantage of asynchronous programming models and to be lightweight and fast. In benchmarks, the combination of Kestrel and ASP.NET Core has been shown to be many times faster than the traditional ASP.NET stack.

This is just a quick overview of what .NET Core has to offer.

In this article, we have tried to explain some details of Docker and the .NET Core platform, show the advantages of using Docker with .NET Core, and help developers choose the right platform. To sum up, the following decision table, provided by Microsoft, summarizes whether to use .NET Framework or .NET Core. Remember that Linux containers require Linux-based Docker hosts (VMs or servers), and Windows Containers require Windows Server-based Docker hosts (VMs or servers).

| Architecture / App Type | Linux containers | Windows Containers |
|---|---|---|
| Microservices on containers | .NET Core | .NET Core |
| Monolithic app | .NET Core | .NET Framework; .NET Core |
| Best-in-class performance and scalability | .NET Core | .NET Core |
| Windows Server legacy app (“brown-field”) migration to containers | – | .NET Framework |
| New container-based development (“green-field”) | .NET Core | .NET Core |
| ASP.NET Core | .NET Core | .NET Core (recommended); .NET Framework |
| ASP.NET 4 (MVC 5, Web API 2, and Web Forms) | – | .NET Framework |
| SignalR services | .NET Core 2.1 or higher | .NET Framework; .NET Core 2.1 or higher |
| WCF, WF, and other legacy frameworks | WCF in .NET Core (only the WCF client library) | .NET Framework; WCF in .NET Core (only the WCF client library) |
| Consumption of Azure services | .NET Core (eventually all Azure services will provide client SDKs for .NET Core) | .NET Framework; .NET Core (eventually all Azure services will provide client SDKs for .NET Core) |
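If you script your build or deployment pipeline, the table can also be encoded as data so that an impossible combination, such as an ASP.NET 4 app in a Linux container, is caught early. The Python below is a hypothetical sketch covering only a few rows of the table; the short row keys (`microservices`, `monolith`, `legacy-migration`, `aspnet4`) are our own labels, not official identifiers.

```python
from typing import Dict, Tuple

# A few rows of the decision table, keyed by (app type, container OS).
# An empty tuple corresponds to a dash (no option) in the table.
DECISIONS: Dict[Tuple[str, str], Tuple[str, ...]] = {
    ("microservices", "linux"): (".NET Core",),
    ("microservices", "windows"): (".NET Core",),
    ("monolith", "linux"): (".NET Core",),
    ("monolith", "windows"): (".NET Framework", ".NET Core"),
    ("legacy-migration", "linux"): (),
    ("legacy-migration", "windows"): (".NET Framework",),
    ("aspnet4", "linux"): (),
    ("aspnet4", "windows"): (".NET Framework",),
}


def frameworks_for(app_type: str, container_os: str) -> Tuple[str, ...]:
    """Return the frameworks the table lists for this combination."""
    key = (app_type, container_os.lower())
    if key not in DECISIONS:
        raise KeyError(f"no table row for {key}")
    return DECISIONS[key]


def is_valid_choice(app_type: str, container_os: str, framework: str) -> bool:
    """True if the table lists `framework` for this app type and container OS."""
    return framework in frameworks_for(app_type, container_os)
```

Keeping the table as plain data means a new row is a one-line change, and the check fails loudly with a `KeyError` for combinations the table does not cover.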

About Redwerk

Our team includes ASP.NET programmers with solid experience in creating apps on Microsoft platforms. As an outsourcing software development agency, we provide complete development services for E-commerce, Business Automation, E-health, Media & Entertainment, E-government, Game Development, and Startups & Innovation. That is Redwerk, a Microsoft software development company with more than a decade of experience and hundreds of successful projects.

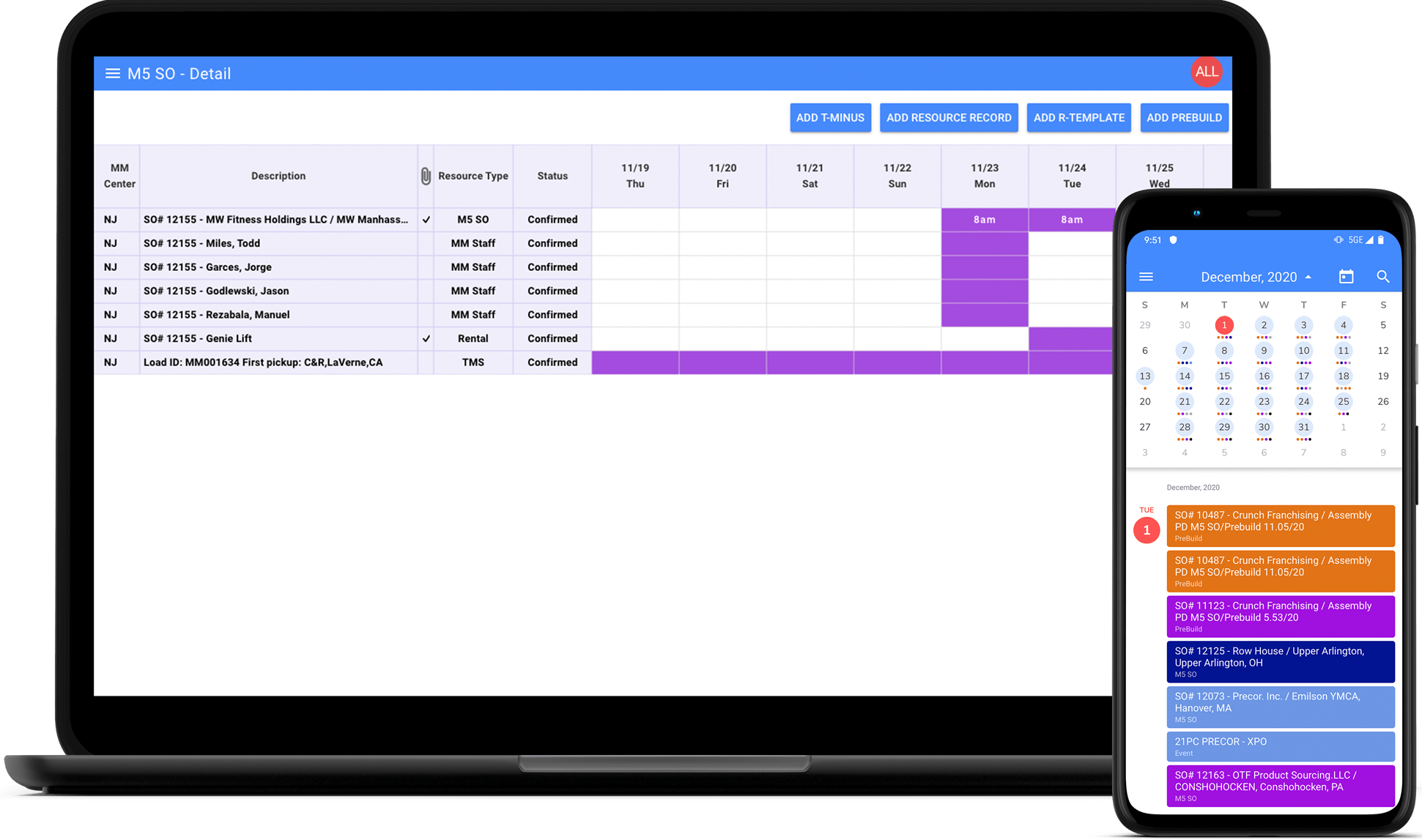

See how we developed a solution for clear-cut fitness logistics using .NET framework