Microsoft (MS) Azure is a cloud computing service provided by Microsoft, which counts over 600 services. In this article, we are going to talk about one of them – MS Azure Cognitive Services.

What are Azure Cognitive Services?

Let us tell you a little about what they are. Azure Cognitive Services are APIs, SDKs, and services, which were developed for enabling the ability to build applications with intelligent algorithms without needing to build them from scratch. The best part is that you can use them for free, though with some limitations.

If you just playing around with these services in order to find an appropriate one for yourself – you will be glad to hear that you can try out nearly all of these services on the MS Azure website – and this is a really great opportunity!

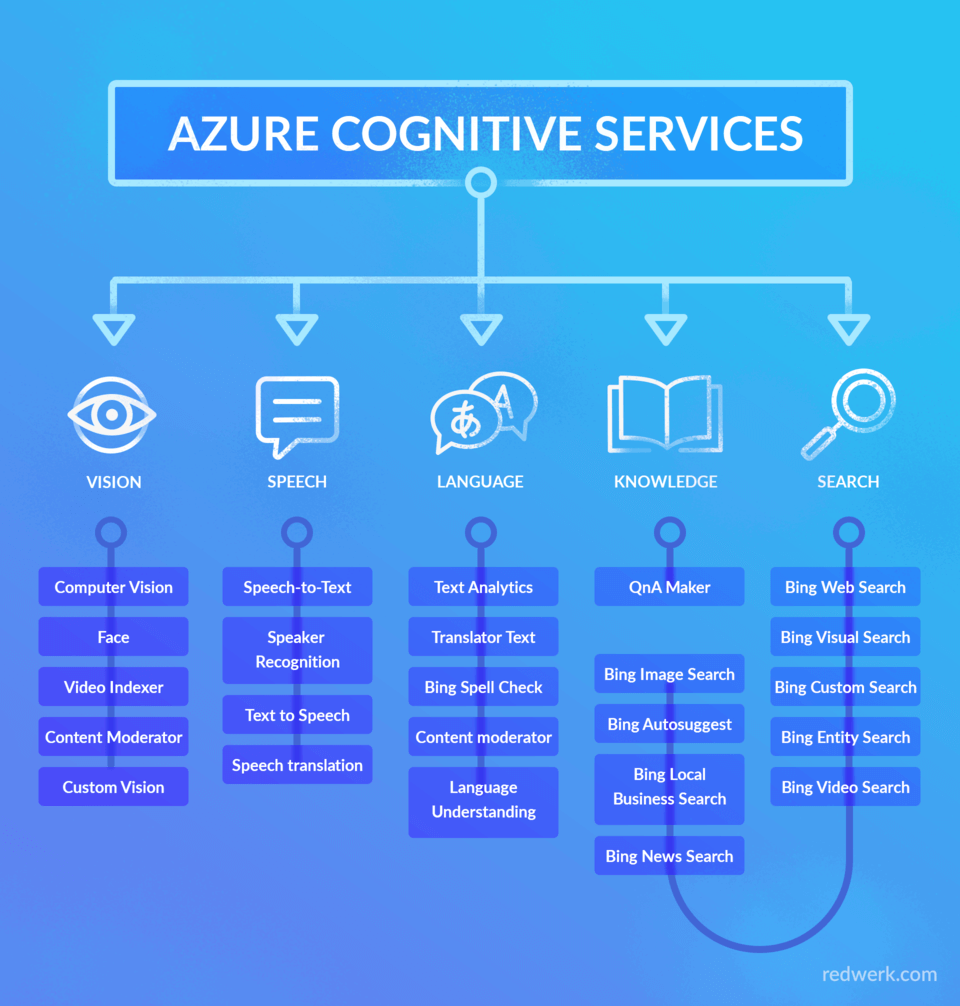

There are five categories of these services: vision, speech, language, knowledge, and search, as it is shown in the scheme below.

These five so-called pillars summarize the variety of the provided functionality and contain more categories and subcategories. In this article, we will share some more details about each of them to give you an overview of Azure Cognitive Services and what opportunities they provide.

Vision

Let’s start with Vision. This set of APIs provides an ability to accurately identify and analyze content within images and/or videos, and it corresponds to various needs. The categories, which are included are Computer Vision, Face, Video Indexer, Content Moderator, and Custom Vision. We will describe all of them in detail, and show you how it works in a real-case scenario.

Using Computer Vision API you will be able to analyze your images and get information about the image itself and its visual content: tags and description in four languages, adult and racy content scores and so on. Also, there are two APIs which allow you to recognize words and even handwritten text from these images. As for the videos, you can analyze them in close to real time by extracting frames of the video from your device and get a text description of the video content.

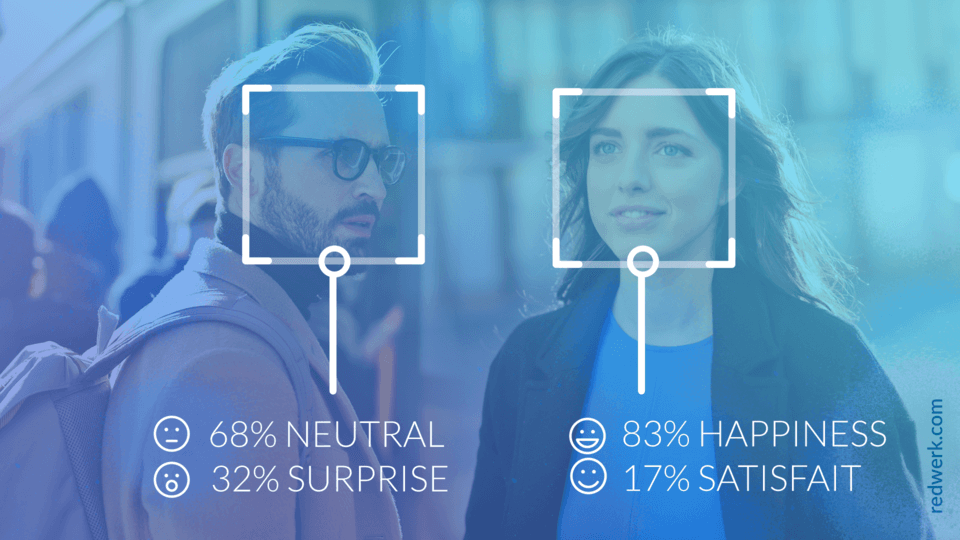

If you ever wanted to use Face Detection and/or Verification in your applications, so the Face API is for you. With it, you will be able to check the probability that two faces belong to the same person, detect faces on the image with some descriptions of their owners’ appearance or detect such emotions as anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise.

Azure Video Indexer is a cloud application built on Azure Media Analytics, Azure Search and several Cognitive Services such as the Face API, Microsoft Translator, the Computer Vision API, and Custom Speech Service. It contains a lot of functionality and to employ it, you do not need to be a developer, you can use the Video Indexer web portal to use up the service without writing a single line of code. For now, it can convert speech to text in 10 languages, give you some statistics for speakers speech ratios, identify sentiments and emotions like joy, sadness, anger, or fear, moderate content by detecting adult and/or racy visuals, identify audio effects like silence, hand claps and so on. You also can use this API to trigger workflows or automate tasks by detecting some phrases or visual effects.

The Azure Content Moderator API is a cognitive service that provides potential to check texts, images, and videos content for discovering unwanted content. In case, if such material is found, the content is marked with labels, which your application then should handle. Except machine detecting, you also can choose a hybrid content moderation process called “Human review tool” in the event that the prediction should be tempered with a real-world context.

The last one of them is Custom Vision (please note that at the moment of writing, this feature is in preview mode). The Azure Custom Vision API is a little bit more complicated than Computer Vision, and allows you to create your own classifications. This means that you will need to train the algorithm on your set of images with their correct tags. While training, the algorithm calculates its own accuracy by testing itself on that same data. After that, you can test, retrain, and finally use it to classify new images according to the needs of your app, or you can export the model for offline use. This training you can conduct either through a web-based interface or using a set of SDKs.

The second category we are going to talk about is Speech, which provides such popular features as speech-to-text (also called speech recognition or transcription), text-to-speech (speech synthesis), and speech translation. You can use speech transcription for converting spoken audio to text either from the recorded files or in real-time. The service can return you intermediate results of the words that have been recognized and automatically detect the end of the speech. The speech recognition enables you to build voice-triggered smart apps, as well as provides the personality verification or speaker identification.

The text-to-speech API endows with functionality to translate your text back to the audio nearly indistinguishable as if it was a real human. It supports more than 75 voices in more than 45 languages and locales.

The Speech Service API allows you to add a translation of speech to your applications for both speech-to-speech and speech-to-text translation. As it is mentioned in Microsoft documentation, the Speech Translation API uses the same technologies that power various Microsoft products and services. This service is already being used by thousands of businesses worldwide in their applications and workflows.

Language

Language consists of such APIs as Text Analytics, Translator Text, Bing Spell Check, Content Moderator (which is also a part of “Vision”), and Language Understanding.

With Text Analytics API you can analyze your text to identify the language, sentiment, key phrases, and entities of it. Translator API is a neural machine translation service that provides you with the ability of text-to-text language translation in more than 60 languages.

Need some spell check in your application with taking into account a context? Then, you will be glad to hear that Bing Spell Search API empowers you with such option, including using specific corrections for documents (adds capitalization, basic punctuation, etc. for document creation) and web search (is more “aggressive” in order to return better search results).

And the Language Understanding (LUIS) – it is a cloud-based API service that can help you to analyze user’s conversational, natural language text and provide its overall meaning and detailed information. A common client application for LUIS is a chatbot, but it also can be used for social media applications, chatbots, speech-enabled desktop applications and so on.

Knowledge

Knowledge API consists only of Q&A Maker – cloud-based API service, which provides great functionality to create a question-and-answer service from the semi-structured content as FAQ documents, URLs, and product manuals. You can use it via the bot or application to provide your users’ with the opportunity to get split-second answers without the need to read FAQ or wait for someone to answer. The service will answer by matching a question with the best possible answer from the Q&As in your Knowledgebase.

Search

This set of APIs makes it possible to create applications providing powerful, Web-scale search without ads. All of the APIs have their own categories like video search or web search, and the like, and depending on it, provides a different type of searches, which can even make your application narrowly focused on your needs. Among them, you can find such types of search as news, video, images search, full web search without the ad, autosuggest or even visual search by image visual content.

Definitely, each of these services can be, and without a doubt, they all deserve it, described in a stand-alone article with in-depth overview and examples. However, allow for induction nature of the given article, we decided to select two of them and show a few examples of the use. So, let us practice a little with emotion recognition and speech transcription API.

Emotion recognition

If you ever tried to review this theme by yourself, you, probably, heard about Azure Emotion API, which was used for emotion recognition before. On February 15, 2019, this API will be deprecated, that is why in this paragraph we will review this capability as part of Face detection API, which will replace it.

Prerequisites:

- Face API subscription key. There are few ways to get it: one of them is to use the trial key, which you can get from the Microsoft “Try Cognitive Services” page or create a Cognitive Service account. For the second option, you will also need an Azure subscription. Do not be scared of this, Azure provides a great opportunity to create a free account and use free tier plans for developer’s needs.

- In the given example a .NET Core will be used, so you can apply any to your taste IDE. You can also create a project with .NET Framework, in this case, you will need Visual Studio 2015 or 2017.

- An image with format JPEG, PNG, GIF (will be used the first frame), or BMP with file size from 1KB to 6MB. Up to 64 faces can be returned for an image if their size is 36×36 to 4096×4096 pixels. There are some cases, when faces may not be detected because of the large face angles or wrong image orientation – take it into account, when choosing an image for analyzing.

In IDE create a new Console App (.NET Core) and name it “CognitiveServicesEx” (or whatever you want, just keep it in mind, then you will copy the code from the example). We will use “Newtonsoft.Json” NuGet package for showing a response, so right click on the project name and select from the context menu “Manage NuGet packages…”. Find in the list this package and install. After that, replace in the created project file “Program.cs” content with the next code:

using Newtonsoft.Json.Linq;

using System;

using System.IO;

using System.Net.Http;

using System.Net.Http.Headers;

namespace CognitiveServicesEx {

class Program {

static void Main(string[] args) {

GetEmotionsFromLocalImage();

Console.ReadLine();

}

static async void GetEmotionsFromLocalImage() {

string imageFilePath =

@"D:\practise\CognitiveServicesExample\CognitiveServicesEx\Resources\IMG_1556.jpg";

if (File.Exists(imageFilePath)) {

try {

Console.WriteLine("\nAnalyzing....\n");

HttpClient client = new HttpClient();

client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key",

"25eb4c2f2d0e41eda1985b8a13c56e30");

string requestParameters = "returnFaceId=true" +

"&returnFaceLandmarks=false" +

"&returnFaceAttributes=smile,emotion";

string uri =

$"https://westcentralus.api.cognitive.microsoft.com" +

$"/face/v1.0/detect?{requestParameters}";

HttpResponseMessage response;

using (FileStream fileStream = new FileStream(imageFilePath,

FileMode.Open,

FileAccess.Read)) {

BinaryReader binaryReader = new BinaryReader(fileStream);

byte[] byteData = binaryReader.ReadBytes((int)fileStream.Length);

using (ByteArrayContent content = new ByteArrayContent(byteData)) {

content.Headers.ContentType =

new MediaTypeHeaderValue("application/octet-stream");

response = await client.PostAsync(uri, content);

string contentString = await response.Content.ReadAsStringAsync();

Console.WriteLine("\nResponse:\n");

Console.WriteLine(JToken.Parse(contentString).ToString());

Console.WriteLine($"\nPress any key to exit...\n");

}

}

}

catch (Exception e) {

Console.WriteLine($"\n{e.Message}\nPress any key to exit...\n");

}

}

else {

Console.WriteLine("\nFile does not exist.\nPress any key to exit...\n");

}

}

}

}

In IDE create a new Console App (.NET Core) and name it “CognitiveServicesEx” (or whatever you want, just keep it in mind, then you will copy the code from the example). We will use “Newtonsoft.Json” NuGet package for showing a response, so right click on the project name and select from the context menu “Manage NuGet packages…”. Find in the list this package and install. After that, replace in the created project file “Program.cs” content with the next code:

Before running this example, we will break this piece of code down for you. This example works with the locally stored image, the path to which we set in the imageFilePath variable. You can also work with image URLs and we will review this example a little bit later.

In “requestParameters” variable we define what exactly information we need. Via this optional parameters, we can also get age, gender, head pose (headPose parameter), facial hair (facialHair parameter), glasses, hair, makeup, occlusion, accessories, blur, exposure, and noise. Each of these parameters can be added to “requestParameters” variable, divided by the comma. We will review the example only emotions and smile parameters.

As for the link to the API, please note, that you must use the same region as you used to get a subscription key. Free trial subscription keys are always generated in the “westus” region, so, if you use such keys right now – you do not need to worry.

Now, run the application. For each type of emotion you will see a number from 0 to 1, the larger this number, the stronger this emotion is manifested by a person. To test we will use the photo from our Halloween corporate party:

The image analysis results are as follows:

Analyzing….

Response:

[{

"faceId": "8fb67a9c-e9f8-47b3-9524-865fc85f1550",

"faceRectangle": {

"top": 512,

"left": 1498,

"width": 817,

"height": 817

},

"faceAttributes": {

"smile": 0.677,

"emotion": {

"anger": 0.0,

"contempt": 0.001,

"disgust": 0.0,

"fear": 0.0,

"happiness": 0.677,

"neutral": 0.322,

"sadness": 0.0,

"surprise": 0.0

}

}

},

{

"faceId": "5b09a37d-5837-4ee7-a7f7-83f3c7d170e4",

"faceRectangle": {

"top": 815,

"left": 965,

"width": 520,

"height": 520

},

"faceAttributes": {

"smile": 0.995,

"emotion": {

"anger": 0.001,

"contempt": 0.0,

"disgust": 0.0,

"fear": 0.002,

"happiness": 0.995,

"neutral": 0.0,

"sadness": 0.0,

"surprise": 0.001

}

}

}]

Press any key to exit…

A successful call returns an array of face entries ranked by face rectangle size in descending order. Each face entry contains the following values (depending on query parameters):

- faceId – the unique identifier of the detected face ( expires in 24 hours after the detection call);

- faceRectangle – a rectangle coordinates of the face location;

- faceAttributes:

- smile: smile intensity (a number between 0 and 1);

- emotion: including a value of neutral, anger, contempt, disgust, fear, happiness, sadness and surprise emotions intensity (a number between 0 and 1).

You can review a list of error codes and messages in Microsoft documentation, most of them are caused by image size, invalid arguments or links to images.

As we have mentioned above, we would review how the REST call should look like, if you needed to analyze the image by URL. Simple as that, you just need to add URL parameter as a HttpContent for the PostAsync method. So, the “GetEmotionsFromLocalImage” method, in this case, will look like this:

static async void GetEmotionsFromLocalImage() {

string imageFilePath =

@"D:\practise\CognitiveServicesExample\CognitiveServicesEx\Resources\IMG_1556.jpg";

if (File.Exists(imageFilePath)) {

try {

Console.WriteLine("\nAnalyzing....\n");

HttpClient client = new HttpClient();

client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key",

"25eb4c2f2d0e41eda1985b8a13c56e30");

string requestParameters = "returnFaceId=true" +

"&returnFaceLandmarks=false" +

"&returnFaceAttributes=smile,emotion";

string uri =

$"https://westcentralus.api.cognitive.microsoft.com" +

$"/face/v1.0/detect?{requestParameters}";

HttpResponseMessage response;

var data = new JObject {

["url"] =

$"https://docs.microsoft.com" +

$"/en-us/azure/cognitive-services/face/images/facefindsimilar.queryface.jpg"

};

var json = JsonConvert.SerializeObject(data);

var content = new StringContent(json, Encoding.UTF8, "application/json");

response = await client.PostAsync(uri, content);

string contentString = await response.Content.ReadAsStringAsync();

Console.WriteLine("\nResponse:\n");

Console.WriteLine(JToken.Parse(contentString).ToString());

Console.WriteLine($"\nPress any key to exit...\n");

}

catch (Exception e) {

Console.WriteLine($"\n{e.Message}\nPress any key to exit...\n");

}

}

else {

Console.WriteLine("\nFile does not exist.\nPress any key to exit...\n");

}

}

As you can see, the work with this API is a piece of cake. Microsoft provides very powerful services, which are really easy to use. Now, you can experiment by yourself with other optional parameters to get a full review of how it works and what capabilities it provides.

Speech transcription

The Speech to Text API provides an opportunity to to convert any spoken audio (like a recorded file, audio from the microphone, etc) to text. In our example, we will try to transcript recorded sample audio to text.

Prerequisites:

- a Speech API subscription key. The ways to get it are the same as for the Vision API subscription key.

- IDE prerequisites are also the same as for the Speech API.

- We will review transcription of the recorded file through REST API, which should be on the next format

- WAV, codec: PCM, bitrate: 16-bit, sample rate: 16 kHz, mono, duration up to 10 seconds;

- OGG, codec: OPUS, bitrate: 16-bit, sample rate: 16 kHz, mono, duration up to 10 seconds.

The above requirements for the file only valid for the REST API and WebSocket in the Speech Service. If you need to recognize longer audio, use the Speech SDK or batch transcription. The Speech SDK currently only supports the WAV format with PCM codec and you should take it into account when use SDK. In this article, we decided to use REST API, because SDK is usually more easy to use, and also it supports a limited number of languages. In a counterweight, the REST API works with any language that can make HTTP request and that is why these examples can be more useful.

Let us modify a little our test program.

using Newtonsoft.Json.Linq;

using System;

using System.IO;

using System.Net.Http;

using System.Net.Http.Headers;

namespace CognitiveServicesEx {

class Program {

static void Main(string[] args) {

Console.WriteLine("Choose what to analyze from the following list:");

Console.WriteLine("\t1 - Image");

Console.WriteLine("\t2 - Audio");

switch (Console.ReadLine()) {

case "1":

GetEmotionsFromLocalImage();

break;

case "2":

ConvertAudioToText();

break;

}

Console.ReadLine();

}

static async void GetEmotionsFromLocalImage() {

string imageFilePath = @"D:\practise\CognitiveServicesExample\CognitiveServicesEx\Resources\IMG_1551.jpg";

string subscriptionKey = "25eb4c2f2d0e41eda1985b8a13c56e30";

string requestParameters = "returnFaceId=true&returnFaceLandmarks=false&returnFaceAttributes=smile,emotion";

string uri = $"https://westcentralus.api.cognitive.microsoft.com/face/v1.0/detect?{requestParameters}";

if (File.Exists(imageFilePath)) {

try {

Console.WriteLine("\nAnalyzing....\n");

HttpClient client = new HttpClient();

client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

HttpResponseMessage response;

using (FileStream fileStream = new FileStream(imageFilePath, FileMode.Open, FileAccess.Read)) {

BinaryReader binaryReader = new BinaryReader(fileStream);

byte[] byteData = binaryReader.ReadBytes((int)fileStream.Length);

using (ByteArrayContent content = new ByteArrayContent(byteData)) {

content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

response = await client.PostAsync(uri, content);

string contentString = await response.Content.ReadAsStringAsync();

Console.WriteLine("Response:\n");

Console.WriteLine(JToken.Parse(contentString).ToString());

Console.WriteLine($"\nPress any key to exit...\n");

}

}

}

catch (Exception e) {

Console.WriteLine($"\n{e.Message}\nPress any key to exit...\n");

}

}

else {

Console.WriteLine("\nFile does not exist.\nPress any key to exit...\n");

}

}

static async void ConvertAudioToText() {

string audioFilePath = @"D:\practise\CognitiveServicesExample\CognitiveServicesEx\Resources\whatstheweatherlike.wav";

string authUri = "https://westus.api.cognitive.microsoft.com/sts/v1.0/issueToken";

string apiUri = "https://westus.stt.speech.microsoft.com/speech/recognition/conversation/cognitiveservices/v1?language=en-US&format=detailed";

string subscriptionKey = "1e907c0e08554d4aaab42f8e1f6939b0";

string token;

using (var client = new HttpClient()) {

Console.WriteLine("\nGet auth token....\n");

client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

UriBuilder uriBuilder = new UriBuilder(authUri);

var result = await client.PostAsync(uriBuilder.Uri.AbsoluteUri, null).ConfigureAwait(false);

token = await result.Content.ReadAsStringAsync().ConfigureAwait(false);

}

if (!String.IsNullOrEmpty(token)) {

Console.WriteLine("\nAnalyzing....\n");

try {

using (var client = new HttpClient()) {

using (var request = new HttpRequestMessage()) {

using (FileStream fileStream = new FileStream(audioFilePath, FileMode.Open, FileAccess.Read)) {

BinaryReader binaryReader = new BinaryReader(fileStream);

byte[] byteData = binaryReader.ReadBytes((int)fileStream.Length);

using (ByteArrayContent content = new ByteArrayContent(byteData)) {

request.Method = HttpMethod.Post;

request.RequestUri = new Uri(apiUri);

request.Content = content;

request.Headers.Add("Authorization", $"Bearer {token}");

request.Content.Headers.Add("Content-Type", "audio/wav");

using (var response = await client.SendAsync(request).ConfigureAwait(false)) {

response.EnsureSuccessStatusCode();

string contentString = await response.Content.ReadAsStringAsync();

Console.WriteLine("Response:\n");

Console.WriteLine(JToken.Parse(contentString).ToString());

Console.WriteLine($"\nPress any key to exit...\n");

}

}

}

}

}

}

catch (Exception e) {

Console.WriteLine($"\n{e.Message}\nPress any key to exit...\n");

}

}

else {

Console.WriteLine("\nAuth token not received.\nPress any key to exit...\n");

}

}

}

}

As you can see, we extended a little our program and add the ability for the user to choose what to analyze. Take a look at the method “ConvertAudioToText” – you will see that it contains two API URLs. Normally, for the speech-to-text REST API, you can use a subscription key, but there also exists another option (which is required and the only one available for the text-to-speech REST API) it is to use an Authorization header. Speech transcription was realized with the Authorization header to diversify examples and provide a wider review. When using the Authorization: Bearer header, you need to send out the request to the issueToken endpoint. It provides you a token, which will be valid for the audio analysis for the next 10 minutes. As the audio used the standard sample with the phrase “What’s the weather like” it was clearly recognized by API:

Choose what to analyze from the following list:

1 - Image

2 - Audio

2

Get auth token….

Analyzing….

Response:

{

"RecognitionStatus": "Success",

"Offset": 300000,

"Duration": 15900000,

"NBest": [{

"Confidence": 0.96764832735061646,

"Lexical": "what's the weather like",

"ITN": "what's the weather like",

"MaskedITN": "what's the weather like",

"Display": "What's the weather like?"

}]

}

Press any key to exit…

On our experience, the more clear sound (without noises, etc.) – the more accurate the result is.

Conclusion

Including artificial intelligence in solutions is becoming more and more popular these days. And Microsoft’s Azure is one of the giants, which provides vigorous AI-powered services useful in many areas, which are easy to implement and employ. If you are interested in using Azure Cognitive Services for your applications and need someone to assist you – contact us.

About Redwerk

Founded in 2005 Redwerk has grown up from a knot of enthusiasts to a two-headquarter agency that harbors 60+ digitally savvy individuals proficient in the use of modern technology and summoned to bring hi-tech industries to a brand new level. Employing only advanced tools we build fabulous and creative solutions for businesses of all sizes across the globe. Redwerk is a right place to outsource custom software development as our tech stack covers not only coding, but requirements analysis, architecture, UI/UX design, QA and testing, maintenance, system administration, and support. If you are looking for a dedicated team, custom-tailored approach and transparency, then Redwerk is that very one-stop shop where your project comes into being on time and within the pocket.