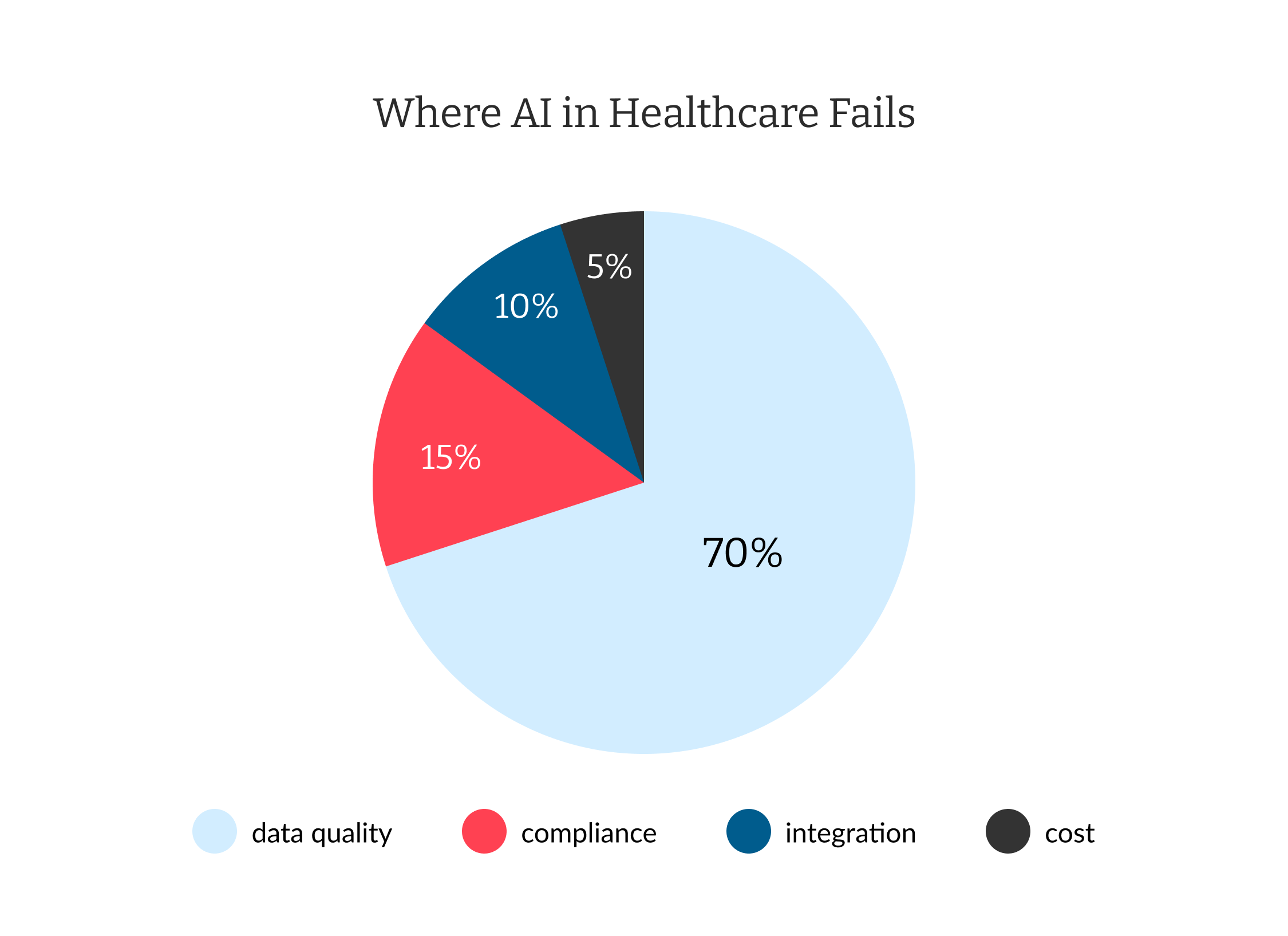

The promise of artificial intelligence (AI) in healthcare is immense: faster diagnosis, targeted treatments, and advanced preventive care. But for every headline about AI breakthroughs, there’s a silent backlog of failures: AI models that went wrong because of poor data quality, compliance oversights, and misunderstood accuracy metrics. As an artificial intelligence development company trusted by over 170 businesses across North America and Europe, we bring hands-on experience in developing healthcare and AI-powered solutions. In this article, we examine why AI in healthcare falls short and share actionable, developer-tested strategies for avoiding those pitfalls.

Fast Answers: Why Does AI in Healthcare Go Wrong?

AI promises to revolutionize healthcare, but the reality is marred by numerous obstacles that frequently cause AI projects to fail or underperform. Understanding the primary reasons behind these failures is crucial for healthcare technology leaders seeking to implement effective, safe, and compliant AI solutions. The biggest issues often boil down to data, compliance, and misplaced trust in AI’s reported accuracy.

- Incomplete or biased data can lead to medical errors, sometimes with severe consequences.

- Regulatory and compliance missteps can halt entire projects or produce unusable results.

- Over-trusting AI accuracy metrics can cause clinicians to miss red flags and rare conditions.

Let’s break down the real challenges—and how any healthcare technology leader can sidestep them.

The Data Weak Link: Garbage In, Garbage Out

The single biggest challenge with AI in healthcare is data. Even industry giants fail because their models are trained on data that is:

- Incomplete or not representative of the patient population

- Structured inconsistently across systems (EMRs, labs, imaging, billing)

- Riddled with human entry errors, duplicates, and outdated records

A 2025 World Economic Forum (WEF) report states that most AI failures in clinical trials are attributed to data quality issues.

Why this matters:

- AI may underperform or introduce new biases (e.g., misdiagnosing patients from minority groups or with rare diseases).

- Incorrect predictions reduce trust, leading clinicians to discard insights and revert to manual workflows.

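Real-World Example: A widely cited machine learning model for predicting pneumonia mortality learned that patients with asthma were at lower risk. The pattern was an artifact of the training data: asthmatic patients with pneumonia were routinely admitted to intensive care and treated aggressively, so their recorded outcomes were better. Because the rule contradicted established medical knowledge, the model was judged unsafe to deploy as-is.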

Compliance Nightmares: Security, Privacy, and Regulation

AI platforms must navigate a web of privacy and compliance standards (HIPAA, GDPR, and local laws for every deployment). Common pitfalls include:

- Insecure data storage and sharing methods.

- Failing to properly anonymize, encrypt, or clean up legacy records.

- Lack of audit trails for model predictions and decision-making logic.

How to avoid them:

- Build compliance into your workflow from day one (automatic logging, trackable audit trails).

- Apply robust encryption and access controls, including zero-trust for sensitive models.

- Stay up-to-date with local privacy regulation changes—they can change deployment feasibility overnight.
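One way to make “trackable audit trails” concrete is a tamper-evident log in which every prediction record stores a hash of the previous record, so any later edit to history breaks the chain. The Python sketch below is a minimal illustration, not a compliance product: the `AuditTrail` class, the model name, the pseudonymous patient IDs, and the user names are all hypothetical, and the log lives in memory rather than in the write-once storage a real deployment would need.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log where each entry stores the hash of the previous one,
    so editing any past entry breaks the chain (hypothetical example class)."""

    def __init__(self):
        self.entries = []

    def record(self, model_version, input_id, prediction, user):
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "input_id": input_id,  # a pseudonymous ID, never raw patient data
            "prediction": prediction,
            "user": user,
            "prev_hash": prev_hash,
        }
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and check that each entry links to its predecessor."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("sepsis-risk-v1.2", "pt-7f3a", {"risk": 0.81}, "dr_lee")
trail.record("sepsis-risk-v1.2", "pt-09bc", {"risk": 0.12}, "dr_lee")
print(trail.verify())  # True
trail.entries[0]["prediction"] = {"risk": 0.10}  # simulate tampering
print(trail.verify())  # False
```

Chaining hashes does not prevent tampering by itself; it makes tampering detectable. Storing the latest hash somewhere separate from the log also catches an attacker who rewrites the entire chain.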

Trust and Accuracy: When Good Metrics Are Not Enough

AI model accuracy ≠ clinical value. A 95% accuracy score on a held-out test set can still hide life-threatening blind spots:

- Rare conditions: A model may predict well for common cases but fail spectacularly for rare or emerging diseases.

- Explainability: Clinicians demand not just “what” but “why”. Opaque models are quickly sidelined in real practice.
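A toy calculation shows how a 95% headline figure can coexist with total failure on a rare condition. The numbers below are hypothetical: 1,000 test cases, 50 of them rare, scored against a degenerate model that always predicts the common class. Reporting recall per class (or per patient subgroup) instead of a single accuracy figure exposes the gap immediately.

```python
def accuracy(y_true, y_pred):
    """Fraction of all cases where the prediction matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, label):
    """Share of cases truly labeled `label` that the model also labeled `label`."""
    hits = [p == label for t, p in zip(y_true, y_pred) if t == label]
    return sum(hits) / len(hits) if hits else float("nan")


# Hypothetical test set: 950 common cases, 50 rare-condition cases.
y_true = ["common"] * 950 + ["rare"] * 50
# A degenerate model that always predicts the majority class.
y_pred = ["common"] * 1000

print(f"accuracy:    {accuracy(y_true, y_pred):.2%}")       # 95.00%
print(f"rare recall: {recall(y_true, y_pred, 'rare'):.2%}")  # 0.00%
```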

Best practice: Deploy a “clinician-in-the-loop” validation system, where doctors can override, question, and log feedback on each AI action. This approach not only catches model failures but also builds real-world trust rapidly.
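Clinician-in-the-loop feedback only builds trust if it is recorded in a form that can be analyzed. The sketch below assumes a hypothetical `Review` record holding the AI's label, the clinician's final label, and an optional comment; it computes the override rate and the most frequent disagreement patterns, which point to where the model needs attention. The case IDs and labels are invented for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Review:
    """One clinician review of one AI output (hypothetical record layout)."""
    case_id: str
    ai_label: str
    clinician_label: str
    comment: str = ""

    @property
    def overridden(self) -> bool:
        return self.ai_label != self.clinician_label


def summarize(reviews):
    """Return the override rate and the three most common (AI, clinician) disagreements."""
    overrides = [r for r in reviews if r.overridden]
    rate = len(overrides) / len(reviews) if reviews else 0.0
    patterns = Counter((r.ai_label, r.clinician_label) for r in overrides)
    return rate, patterns.most_common(3)


reviews = [
    Review("c1", "benign", "benign"),
    Review("c2", "benign", "malignant", "subtle lesion at margin"),
    Review("c3", "malignant", "malignant"),
    Review("c4", "benign", "malignant", "atypical presentation"),
]
rate, top = summarize(reviews)
print(f"override rate: {rate:.0%}")  # 50%
print(top)  # [(('benign', 'malignant'), 2)]
```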

Integration and Interoperability

Seamlessly connecting AI solutions with existing healthcare systems is critical for realizing their full potential. However, healthcare organizations often face significant hurdles due to the fragmentation of data environments and legacy IT infrastructure. The challenges of AI integration in healthcare revolve around overcoming data silos and achieving interoperability, both of which are essential to scale AI benefits across institutions while maintaining accuracy and regulatory compliance.

- Data silos: Many healthcare providers operate with proprietary formats and legacy databases, resulting in a lack of interoperability.

- Interoperability: Standards like HL7 FHIR help, but mapping real data is a labor-intensive process, especially when scaling AI across institutions.

Practical tip: Early investment in smart data integration prevents technical debt that can cripple validation, upgrades, or regulatory reporting.
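As an illustration of what mapping real data to HL7 FHIR involves, the sketch below converts one row from a hypothetical legacy lab table into a minimal FHIR R4 `Observation` resource. The row layout, the local code `GLU`, and the assumption that the legacy system reports glucose in mg/dL are invented for the example; the LOINC code shown (2345-7, glucose in serum or plasma) should still be checked against the official LOINC database before use in any real mapping. Unmapped codes raise an error so that they surface for manual review instead of being silently guessed.

```python
import json

# Hypothetical mapping from legacy lab codes to (LOINC code, display, UCUM unit).
# Verify every code against the official LOINC database before real use.
LOCAL_TO_LOINC = {
    "GLU": ("2345-7", "Glucose [Mass/volume] in Serum or Plasma", "mg/dL"),
}


def to_fhir_observation(row):
    """Map one legacy lab row (a dict) to a minimal FHIR R4 Observation resource."""
    mapping = LOCAL_TO_LOINC.get(row["test_code"])
    if mapping is None:
        # Surface unmapped codes for manual review instead of guessing.
        raise KeyError(f"no LOINC mapping for local code {row['test_code']!r}")
    loinc_code, display, ucum_unit = mapping
    return {
        "resourceType": "Observation",
        "status": "final",
        "code": {
            "coding": [
                {"system": "http://loinc.org", "code": loinc_code, "display": display}
            ]
        },
        "subject": {"reference": f"Patient/{row['patient_id']}"},
        "effectiveDateTime": row["collected_at"],
        "valueQuantity": {
            "value": float(row["value"]),  # assumes the legacy value is stored as text
            "unit": ucum_unit,
            "system": "http://unitsofmeasure.org",
            "code": ucum_unit,
        },
    }


legacy_row = {
    "patient_id": "12345",
    "test_code": "GLU",
    "value": "98",
    "collected_at": "2024-03-01T08:30:00Z",
}
print(json.dumps(to_fhir_observation(legacy_row), indent=2))
```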

Cost: More Than Just the Algorithm

AI in healthcare incurs significant costs that extend far beyond the development of the algorithm. Healthcare organizations must consider a range of factors, including infrastructure, compliance, ongoing maintenance, and training expenses, which can easily surpass initial development budgets and pose challenges to long-term success.

Implementing AI involves:

- Large upfront investment in infrastructure, tools, and skilled personnel (often out of reach for small-to-midsize providers).

- Ongoing costs for compliance, audits, retraining, and support.

- Failing to budget correctly, or underestimating “hidden” costs, has been the death knell for many promising pilots.

Advantages and Disadvantages of AI in Healthcare

How has AI impacted the health industry? By automating burdensome administrative work such as extensive note-taking, and by assisting with routine tasks like interpreting medical scans, AI frees healthcare professionals to reconnect with the human aspect of care. This fosters genuine healing, allowing doctors to truly listen and patients to feel heard. However, like any powerful technology, AI also presents its own set of challenges.

| Advantages | Disadvantages |
|---|---|
| Early disease detection and faster diagnosis | Data quality and availability issues |
| Workflow and efficiency improvements | Privacy, security, and compliance complexity |
| Cost reduction in administration | Potential for algorithmic bias |
| Personalized care through predictive models | Clinical misinterpretation of results |
| Increased access via telemedicine, triage | High implementation and maintenance costs |
| Automated documentation and billing | Overreliance, reducing clinician oversight |

Solving AI in Healthcare: A Practical Playbook

Innovating with AI in healthcare involves overcoming persistent challenges, including poor data quality, regulatory hurdles, and clinician adoption. The sustainability and success of any artificial intelligence solution in healthcare depend on tackling issues such as data integrity, privacy compliance, system interoperability, and ongoing model validation. By addressing these areas, organizations can maximize the benefits of AI technology in healthcare, reduce risks of errors, and accelerate adoption by clinical teams.

Below are actionable steps—each informed by direct experience and real-world proof—to ensure AI delivers on its promise for providers, patients, and payers alike.

1. Prioritize Data Quality

- Conduct thorough data audits before commencing any AI work.

- Utilize automated tools to identify and flag anomalies, duplicates, and missing values.

- Enforce standard vocabularies (SNOMED, LOINC) and automatically de-identify patient information.
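An automated audit does not need heavy tooling to be useful. The following sketch, using only the Python standard library, flags missing required fields, exact duplicate rows, and values outside plausible ranges. The field names and range limits are hypothetical placeholders; real limits should be agreed with clinical staff for each dataset.

```python
# Hypothetical required fields and plausibility ranges; agree real limits with clinicians.
REQUIRED_FIELDS = ("patient_id", "age", "heart_rate")
PLAUSIBLE_RANGES = {"age": (0, 120), "heart_rate": (20, 250)}


def audit(records):
    """Return (row_index, issue) pairs for missing values, exact duplicates, and outliers."""
    issues = []
    seen = set()
    for i, rec in enumerate(records):
        for field in REQUIRED_FIELDS:
            if rec.get(field) in (None, ""):
                issues.append((i, f"missing {field}"))
        key = tuple(sorted(rec.items()))  # identical rows produce identical keys
        if key in seen:
            issues.append((i, "exact duplicate of an earlier row"))
        seen.add(key)
        for field, (low, high) in PLAUSIBLE_RANGES.items():
            value = rec.get(field)
            if isinstance(value, (int, float)) and not low <= value <= high:
                issues.append((i, f"{field}={value} outside {low}-{high}"))
    return issues


records = [
    {"patient_id": "p1", "age": 54, "heart_rate": 72},
    {"patient_id": "p2", "age": None, "heart_rate": 88},
    {"patient_id": "p1", "age": 54, "heart_rate": 72},
    {"patient_id": "p3", "age": 430, "heart_rate": 65},
]
for row, issue in audit(records):
    print(f"row {row}: {issue}")
```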

2. Bake in Compliance

- Automate encryption and regular compliance checks.

- Maintain version-controlled, transparent audit logs for regulators and internal QA.

- Use synthetic datasets for R&D when patient privacy is a concern.
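For early prototyping, even a simple generator can supply realistic-looking records that contain no real patient data. The toy sketch below samples each field independently from assumed distributions, so it does not preserve the correlations found in real clinical data; dedicated synthetic-data tools model those relationships and should be evaluated for anything beyond pipeline testing. All field names and distribution parameters are hypothetical.

```python
import random


def synthetic_patients(n, seed=0):
    """Generate n fake patient records with no link to any real person."""
    rng = random.Random(seed)  # a fixed seed makes the dataset reproducible
    records = []
    for i in range(n):
        # Toy assumptions: uniform adult ages, heart rate centered on 75 bpm and
        # clipped to 40-180, about 10% diabetic. Fields are sampled independently.
        heart_rate = min(max(round(rng.gauss(75, 12)), 40), 180)
        records.append({
            "patient_id": f"SYN-{i:05d}",  # prefix marks the record as synthetic
            "age": rng.randint(18, 90),
            "heart_rate": heart_rate,
            "diabetic": rng.random() < 0.10,
        })
    return records


for rec in synthetic_patients(3):
    print(rec)
```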

3. Foster Clinician Trust

- Involve clinicians from model specs through every validation cycle.

- Provide easy-to-understand model explanations—let doctors see why predictions happen.

- Set up mandatory periodic revalidation to ensure models adapt to new clinical realities.
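For linear models, a basic explanation can be read straight from the model: each feature's weight times its value is that feature's contribution to the predicted log-odds. The sketch below applies this to a hypothetical logistic-regression readmission model (the feature names, weights, and bias are invented for illustration). Non-linear models need dedicated attribution methods such as SHAP, but the goal is the same: a short, ranked list of reasons a clinician can check against their own judgment.

```python
import math

# Hypothetical logistic-regression readmission model (weights invented for illustration).
WEIGHTS = {"age_over_65": 0.8, "prior_admissions": 0.6, "on_anticoagulants": 0.4}
BIAS = -2.0


def explain(features):
    """Return the predicted probability and per-feature log-odds contributions, largest first."""
    contributions = {name: w * features[name] for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))  # logistic (sigmoid) function
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


probability, ranked = explain({"age_over_65": 1, "prior_admissions": 3, "on_anticoagulants": 0})
print(f"predicted risk: {probability:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f} log-odds")
```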

4. Invest in Long-Term Integration

- Design for interoperability from the start—retrofitting costs more later.

- Collaborate openly with IT, clinical, and legal teams.

5. Monitor, Learn, and Adapt

- Capture feedback—successful AI deployments continually retrain and improve using both successes and flagged failures.
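Continuous improvement depends on noticing when performance slips. The sketch below keeps a rolling window of recent predictions, each compared with the outcome later confirmed by clinicians, and flags the model for review when rolling accuracy falls below a baseline minus a tolerance. The class name, window size, baseline, and tolerance are hypothetical choices to tune per deployment.

```python
from collections import deque


class PerformanceMonitor:
    """Track recent prediction outcomes and flag the model when accuracy drifts down."""

    def __init__(self, baseline_accuracy, window=200, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically

    def log(self, prediction, confirmed_label):
        """Record whether a prediction matched the label later confirmed by a clinician."""
        self.outcomes.append(prediction == confirmed_label)

    def rolling_accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_review(self):
        """True once a full window scores below baseline minus tolerance."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = PerformanceMonitor(baseline_accuracy=0.92, window=100)
for i in range(100):
    # Simulated feedback: the model is right on 80 of the last 100 cases.
    monitor.log("positive", "positive" if i < 80 else "negative")
print(monitor.rolling_accuracy(), monitor.needs_review())
```

A flagged model would then go through retraining and revalidation, with the clinician-confirmed cases serving as new labeled data.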

Our Practical Experience

When helping to build the PrideFit App, our team managed sensitive healthcare data, ensured GDPR compliance, and integrated explainable AI-driven fitness recommendations. These recommendations were tested in “real user” environments only after rigorous validation and QA. This app’s success underscores our commitment to secure integration, compliance, and actionable insights—all while delivering real-world value.

Ready to build a secure, high-impact app or tackle complex integrations? Contact our team today to discuss how we can deliver similar results for your project.

FAQ

What are the negative effects of AI on healthcare?

AI can introduce data privacy risks, algorithmic biases, and potential misdiagnoses if models are improperly validated or trained with poor-quality data.

Has AI made mistakes in healthcare?

Yes. Documented cases include faulty risk predictions resulting from incomplete training sets and the misclassification of patient groups, which led to clinical recalls and reevaluations.

What is the biggest challenge of AI in healthcare?

Data quality and regulatory compliance are the top hurdles—no model can succeed if it relies on bad data or violates patient privacy laws.

How can AI be used in healthcare?

AI supports diagnostics, automates billing, predicts patient risks, personalizes treatment, and enhances administrative efficiency.

What is the role of AI in healthcare?

AI’s role is to augment (not replace) clinicians—streamlining care, offering rapid insights, and reducing human errors—so long as data, compliance, and integration are actively managed.
